Prerequisite

Ideally you have two internet-facing VMs, one acting as manager and one as worker, and your own domain. You can also follow along with locally hosted VMs on an internal network and a self-signed certificate, but you would miss the Let’s Encrypt SSL configuration part. I’m using two Ubuntu 18.04 VMs hosted on Azure to run the containers. Make sure port 443 is open on both VMs. Additionally, the Let’s Encrypt validation process requires access to port 80, so open it on the first VM only.

I’ll be using Apache Tomcat to simulate the app we’ll be deploying on the cluster. You can get a sample war file to deploy on the cluster here.

Preparing the host

Make sure both VMs can talk to each other. For this article, create these folders on each of the VMs:

- /app/webapps

- /app/conf

- /app/logs

- /app/certs
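The four folders above can be created in one go. This is a minimal sketch, not part of the original setup: the function name `make_app_dirs` is made up for illustration, and the base path is a parameter so the function can be tried somewhere harmless first.

```shell
# Create the four host directories used in this article under a base path.
# The base path defaults to /app; make_app_dirs is a hypothetical helper name.
make_app_dirs() {
    base="${1:-/app}"
    for d in webapps conf logs certs; do
        mkdir -p "$base/$d" || return 1
    done
}
```

On the VMs, you would call `make_app_dirs /app` from a root shell, since /app sits at the filesystem root.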

Next, install Docker on both VMs with

surfer@M5Dock02:~$ sudo apt install docker docker.io

SSL Certificate

If you’re using locally hosted VMs, create a self-signed certificate. If you have your own domain and would like to use Let’s Encrypt, you need to install certbot. On the first server, start by adding the repo:

surfer@M5Dock01:~$ sudo add-apt-repository ppa:certbot/certbot

After your server has finished updating the repo database, install certbot:

surfer@M5Dock01:~$ sudo apt install certbot

..and request a certificate for our domain

sudo certbot certonly --standalone --preferred-challenges http -d dockers.ready.web.id

Follow the instructions, and Let’s Encrypt will validate your ownership of the domain you’re registering via port 80. If successful, the certificate should be ready at

/etc/letsencrypt/live/dockers.ready.web.id/

Replace my domain with your domain, of course. Inside the directory, you’ll find:

root@M5Dock01:/etc/letsencrypt/live/dockers.ready.web.id# ls -la
total 12
drwxr-xr-x 2 root root 4096 Jun 4 04:41 .
drwx------ 3 root root 4096 Jun 4 04:41 ..
-rw-r--r-- 1 root root  692 Jun 4 04:41 README
lrwxrwxrwx 1 root root   44 Jun 4 04:41 cert.pem -> ../../archive/dockers.ready.web.id/cert1.pem
lrwxrwxrwx 1 root root   45 Jun 4 04:41 chain.pem -> ../../archive/dockers.ready.web.id/chain1.pem
lrwxrwxrwx 1 root root   49 Jun 4 04:41 fullchain.pem -> ../../archive/dockers.ready.web.id/fullchain1.pem
lrwxrwxrwx 1 root root   47 Jun 4 04:41 privkey.pem -> ../../archive/dockers.ready.web.id/privkey1.pem

HAProxy works with a single .pem file that combines the certificate and the key. To create such a file from what Let’s Encrypt gave us, do:

DOMAIN='dockers.ready.web.id' sudo -E bash -c 'cat /etc/letsencrypt/live/$DOMAIN/fullchain.pem /etc/letsencrypt/live/$DOMAIN/privkey.pem > /app/certs/$DOMAIN.pem'

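Our /app/certs directory should now contain a single .pem file. We can now close access to port 80 on the server as we no longer need it for the initial setup. Keep in mind that Let’s Encrypt certificates expire, and renewing with the standalone HTTP challenge will need port 80 open again.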
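Before moving on, it can be worth checking that the combined file really holds both parts, for example in case the concatenation ran without permission to read the key. The sketch below is an assumption-laden convenience, not something the original setup requires: `check_combined_pem` is a hypothetical helper that only looks for the PEM header lines and does not validate the certificate itself.

```shell
# Succeed only if the given file contains at least one certificate block
# and at least one private key block. check_combined_pem is a hypothetical
# helper; it inspects PEM header lines only.
check_combined_pem() {
    pem="$1"
    [ -r "$pem" ] || { echo "cannot read $pem" >&2; return 2; }
    certs=$(grep -c -e '-----BEGIN CERTIFICATE-----' "$pem" || true)
    keys=$(grep -c -e '-----BEGIN .*PRIVATE KEY-----' "$pem" || true)
    echo "certificates: $certs, private keys: $keys"
    [ "$certs" -ge 1 ] && [ "$keys" -ge 1 ]
}
```

With the file from this article, the call would be `check_combined_pem /app/certs/dockers.ready.web.id.pem`, with your own domain substituted.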

Preparing Apache Tomcat

There are a couple of items we need to prepare for our Tomcat deployment. Do this on both VMs. First, the war file: simply put your .war file in /app/webapps and we are done.

Next, to secure our Tomcat deployment, we need to make a minor adjustment to server.xml. Since our base Tomcat Docker image uses Tomcat version 8.x, get one from here, and extract server.xml from the tarball archive. Modify the file by adding

<Valve className="org.apache.catalina.valves.ErrorReportValve" showReport="false" showServerInfo="false" />

just before the </Host> tag. Move the server.xml file to /app/conf. The snippet above tells our Tomcat service to hide the server version when displaying error pages.
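If you prefer not to edit server.xml by hand, the insertion can be scripted. The following is a sketch under assumptions: `add_error_valve` is a made-up helper name, it treats any line containing </Host> as the insertion point, and it leaves the file unchanged if the string ErrorReportValve already appears anywhere in it.

```shell
# Print the given server.xml to stdout with the ErrorReportValve line
# inserted before each </Host> line. If the file already mentions
# ErrorReportValve, it is printed unchanged.
# add_error_valve is a hypothetical helper name.
add_error_valve() {
    xml="$1"
    valve='<Valve className="org.apache.catalina.valves.ErrorReportValve" showReport="false" showServerInfo="false" />'
    if grep -q 'ErrorReportValve' "$xml"; then
        cat "$xml"
    else
        awk -v valve="$valve" '/<\/Host>/ { print "        " valve } { print }' "$xml"
    fi
}
```

A possible use is `add_error_valve server.xml > server.xml.new`, followed by reviewing the result and moving it to /app/conf/server.xml.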

To recap, these are the contents of our directories so far:

surfer@M5Dock01:~$ ls -la /app/webapps/
total 16
drwxrwxrwx 2 root   root   4096 Jun 7 10:54 .
drwxr-xr-x 6 root   root   4096 Jun 7 09:31 ..
-rw-rw-r-- 1 surfer surfer 4606 Jun 7 08:57 sample.war
surfer@M5Dock01:~$ ls -la /app/certs/
total 16
drwxr-xr-x 2 root root 4096 Jun 7 10:28 .
drwxr-xr-x 6 root root 4096 Jun 7 09:31 ..
-rw-r--r-- 1 root root 5274 Jun 4 04:56 dockers.ready.web.id.pem
surfer@M5Dock01:~$ ls -la /app/conf/
total 16
drwxr-xr-x 2 root root 4096 Jun 7 09:39 .
drwxr-xr-x 6 root root 4096 Jun 7 09:31 ..
-rw------- 1 root root 7657 Jun 7 09:39 server.xml
surfer@M5Dock01:~$ ls -la /app/logs
total 8
drwxr-xr-x 2 root root 4096 Jun 7 10:57 .
drwxr-xr-x 6 root root 4096 Jun 7 09:31 ..

Configuring Docker

Go into the first server and initiate it as swarm manager with:

surfer@M5Dock01:~$ sudo docker swarm init --advertise-addr the.manager.ip.address

Replace “the.manager.ip.address” with the IP address of the manager node. The output should be similar to this:

Swarm initialized: current node (nl2a6qenr7l869o3orl2qvaw5) is now a manager.
To add a worker to this swarm, run the following command:
docker swarm join --token SWMTKN-1-2q13xezxtl6ycm5fejjasccd9cznn2cicjt1bf6venba8wi1w2-a6y71bozl1j25ft76c8uhqup9 the.manager.ip.address:2377
To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

Use the output above to join the second server as a worker node:

surfer@M5Dock02:~$ sudo docker swarm join --token SWMTKN-1-2q13xezxtl6ycm5fejjasccd9cznn2cicjt1bf6venba8wi1w2-a6y71bozl1j25ft76c8uhqup9 the.manager.ip.address:2377

If you see this line:

This node joined a swarm as a worker.

..then we have completed our Docker Swarm setup. You can check the nodes with:

surfer@M5Dock01:~$ sudo docker node ls
ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
nl2a7qe7r7l8k9oeors2qvaw5 *   M5Dock01   Ready    Active         Leader           18.09.5
tfrb5729q22gd235wekh6ar3a     M5Dock02   Ready    Active                          18.09.5
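The same check can be scripted, which helps if you rebuild the swarm often. This is only a sketch: `nodes_ready` is a hypothetical helper that reads "hostname status" pairs on standard input, so it can be fed from `docker node ls` with a `--format` template.

```shell
# Read "hostname status" pairs on stdin and succeed only if exactly
# the expected number of nodes report the status Ready.
# nodes_ready is a hypothetical helper name.
nodes_ready() {
    expected="$1"
    ready=$(awk '$2 == "Ready" { n++ } END { print n + 0 }')
    echo "ready nodes: $ready (expected $expected)"
    [ "$ready" -eq "$expected" ]
}

# Usage on the manager node:
#   sudo docker node ls --format '{{.Hostname}} {{.Status}}' | nodes_ready 2
```

For the two-node setup in this article the expected count is 2; adjust it if you add nodes later.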

Now, on to composing our deployment

Composing and Deploying

Here is what we are going to build. The cluster consists of the following components:

- Multiple Tomcat containers, serving the application

- A single DockerCloud HAProxy container serving as load balancer

- An Overlay network where all of these components reside

This is what my Docker Compose YAML looks like:

surfer@M5Dock01:~$ more docker-compose.yml
version: '3'
services:
  tcserv:
    image: tomcat
    ports:
      - 8080
    environment:
      - SERVICE_PORTS=8080
    volumes:
      - /app/webapps:/usr/local/tomcat/webapps/
      - /app/logs:/usr/local/tomcat/logs
      - /app/conf/server.xml:/usr/local/tomcat/conf/server.xml
    deploy:
      replicas: 10
      update_config:
        parallelism: 5
        delay: 10s
      restart_policy:
        condition: on-failure
        max_attempts: 3
        window: 120s
    networks:
      - web
  proxy:
    image: dockercloud/haproxy
    depends_on:
      - tcserv
    environment:
      - BALANCE=leastconn
      - CERT_FOLDER=/host-certs/
      - VIRTUAL_HOST=https://*
      - RSYSLOG_DESTINATION=10.0.0.6:514
      - EXTRA_GLOBAL_SETTINGS=ssl-default-bind-options no-tlsv10
    volumes:
      - /var/run/docker.sock:/var/run/docker.sock
      - /app/certs:/host-certs
    ports:
      - 443:443
    networks:
      - web
    deploy:
      placement:
        constraints: [node.role == manager]
networks:
  web:
    driver: overlay

The first service, named tcserv, hosts the Apache Tomcat configuration. It is based on the official tomcat image. The directories /app/webapps and /app/logs are mounted into each container spawned by the service, so all containers on a VM share the war file in that VM’s webapps directory and write their logs to its /app/logs directory. Each Tomcat container also uses the same server.xml configuration, hosted in /app/conf. Initially, the swarm will create 10 replicas of the Tomcat container. Port 8080 is only served through the Docker network “web” and is not exposed to the outside world.

The second service is called proxy; a single container will be deployed, and it will reside on the manager host. It declares the tcserv service as a dependency, although docker stack deploy ignores depends_on when deploying a version 3 file in swarm mode. It uses the least-connection algorithm (BALANCE=leastconn) to distribute requests, and uses the SSL certificate located in /app/certs. The “ssl-default-bind-options no-tlsv10” line ensures the container only serves TLS v1.1 or newer.

Both services are connected to each other through a Docker network that we name “web”. Since it spans multiple nodes, it uses the overlay driver.

To initiate the deployment, do

surfer@M5Dock01:~/labs/dockers/ssl-termination$ sudo docker stack deploy --compose-file=docker-compose.yml app

The swarm will populate both manager and worker nodes with containers. To see the distribution, do:

surfer@M5Dock01:~$ sudo docker node ls
ID                            HOSTNAME   STATUS   AVAILABILITY   MANAGER STATUS   ENGINE VERSION
zco5lu92ozm10igs78tvviv23 *   M5Dock01   Ready    Active         Leader           18.09.5
uqqw3e2bbmxh7ch6ewocrzhz3     M5Dock02   Ready    Active                          18.09.5
surfer@M5Dock01:~$ sudo docker node ps zco5lu92ozm10igs78tvviv23
ID             NAME            IMAGE                        NODE       DESIRED STATE   CURRENT STATE           ERROR   PORTS
cme0ee2cpoep   app_proxy.1     dockercloud/haproxy:latest   M5Dock01   Running         Running 8 minutes ago
g5huakpaferm   app_tcserv.1    tomcat:latest                M5Dock01   Running         Running 8 minutes ago
idx4fxnx39zz   app_tcserv.4    tomcat:latest                M5Dock01   Running         Running 8 minutes ago
gpsna8nqjkwm   app_tcserv.6    tomcat:latest                M5Dock01   Running         Running 8 minutes ago
sqj1pnb0lqeg   app_tcserv.7    tomcat:latest                M5Dock01   Running         Running 8 minutes ago
zrcshoxuu3n3   app_tcserv.10   tomcat:latest                M5Dock01   Running         Running 8 minutes ago
surfer@M5Dock01:~$ sudo docker node ps uqqw3e2bbmxh7ch6ewocrzhz3
ID             NAME            IMAGE           NODE       DESIRED STATE   CURRENT STATE           ERROR   PORTS
lv1vhv53h1zo   app_tcserv.2    tomcat:latest   M5Dock02   Running         Running 8 minutes ago
d5hezt78jf5e   app_tcserv.3    tomcat:latest   M5Dock02   Running         Running 8 minutes ago
x6qzsgc3n0um   app_tcserv.5    tomcat:latest   M5Dock02   Running         Running 8 minutes ago
7e52yyzjawwu   app_tcserv.8    tomcat:latest   M5Dock02   Running         Running 8 minutes ago
hh3z5vul9d7d   app_tcserv.9    tomcat:latest   M5Dock02   Running         Running 8 minutes ago

As you can see, 5 Tomcat containers are hosted on the first VM, while another 5 reside on the second. Now, let’s check whether the app is accessible through a browser:

Next, let’s see whether the HAProxy configuration in the container is handling all of the available Tomcat containers:

surfer@M5Dock01:~$ sudo docker ps
CONTAINER ID   IMAGE                        COMMAND                  CREATED             STATUS             PORTS                       NAMES
a2bb3724406a   dockercloud/haproxy:latest   "/sbin/tini -- docke…"   About an hour ago   Up About an hour   80/tcp, 443/tcp, 1936/tcp   app_proxy.1.cme0ee2cpoepddv434k2gdnm7
316beeb57001   tomcat:latest                "catalina.sh run"        About an hour ago   Up About an hour   8080/tcp                    app_tcserv.1.g5huakpafermjjij70072mibv
f67e6980a9ec   tomcat:latest                "catalina.sh run"        About an hour ago   Up About an hour   8080/tcp                    app_tcserv.4.idx4fxnx39zzj1l2be6cjuwmu
bd65a4f87d0e   tomcat:latest                "catalina.sh run"        About an hour ago   Up About an hour   8080/tcp                    app_tcserv.10.zrcshoxuu3n3nesd0fpoh81gr
52f9f0adb6f5   tomcat:latest                "catalina.sh run"        About an hour ago   Up About an hour   8080/tcp                    app_tcserv.6.gpsna8nqjkwmznpnmyoiugr0u
f395471459f6   tomcat:latest                "catalina.sh run"        About an hour ago   Up About an hour   8080/tcp                    app_tcserv.7.sqj1pnb0lqeg1xnn7mkgmyhh3

Now let us check the current HAProxy configuration. Do

surfer@M5Dock01:~$ sudo docker exec -ti a2bb3724406a /bin/sh

When you are logged in to HAProxy container, do:

# more haproxy.cfg

And look at the backend portion of the config file:

backend default_service
  server app_tcserv.1.g5huakpafermjjij70072mibv 10.0.0.3:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.10.zrcshoxuu3n3nesd0fpoh81gr 10.0.0.6:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.2.lv1vhv53h1zoq04i9hp6dy6ak 10.0.0.7:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.3.d5hezt78jf5e1lq0z0d4fmwel 10.0.0.8:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.4.idx4fxnx39zzj1l2be6cjuwmu 10.0.0.9:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.5.x6qzsgc3n0umdp7yp1son3pd6 10.0.0.10:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.6.gpsna8nqjkwmznpnmyoiugr0u 10.0.0.4:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.7.sqj1pnb0lqeg1xnn7mkgmyhh3 10.0.0.5:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.8.7e52yyzjawwufxhrf4ws8s3g2 10.0.0.11:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.9.hh3z5vul9d7desnnhn9vvi6ap 10.0.0.12:8080 check inter 2000 rise 2 fall 3
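Counting the server lines by eye gets tedious once the service grows. A small sketch for counting them instead: `count_backend_servers` is a hypothetical helper that reads a configuration on standard input and counts every line whose first word is `server`, so it assumes the file contains no server entries outside the backend you care about.

```shell
# Count lines whose first field is "server" in an HAProxy configuration
# read from stdin. count_backend_servers is a hypothetical helper name.
count_backend_servers() {
    awk '$1 == "server" { n++ } END { print n + 0 }'
}

# Usage on the manager, with the container ID taken from docker ps and
# the same relative haproxy.cfg path used in the interactive session:
#   sudo docker exec a2bb3724406a cat haproxy.cfg | count_backend_servers
```

The number printed should match the replica count of the tcserv service.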

As you can see, the HAProxy container is currently load balancing 10 containers. Now let’s crank it up:

surfer@M5Dock01:~$ sudo docker service scale app_tcserv=20

Wait until everything settles:

app_tcserv scaled to 20
overall progress: 20 out of 20 tasks
1/20: running [==================================================>]
2/20: running [==================================================>]
3/20: running [==================================================>]
4/20: running [==================================================>]
5/20: running [==================================================>]
6/20: running [==================================================>]
7/20: running [==================================================>]
8/20: running [==================================================>]
9/20: running [==================================================>]
10/20: running [==================================================>]
11/20: running [==================================================>]
12/20: running [==================================================>]
13/20: running [==================================================>]
14/20: running [==================================================>]
15/20: running [==================================================>]
16/20: running [==================================================>]
17/20: running [==================================================>]
18/20: running [==================================================>]
19/20: running [==================================================>]
20/20: running [==================================================>]
verify: Service converged

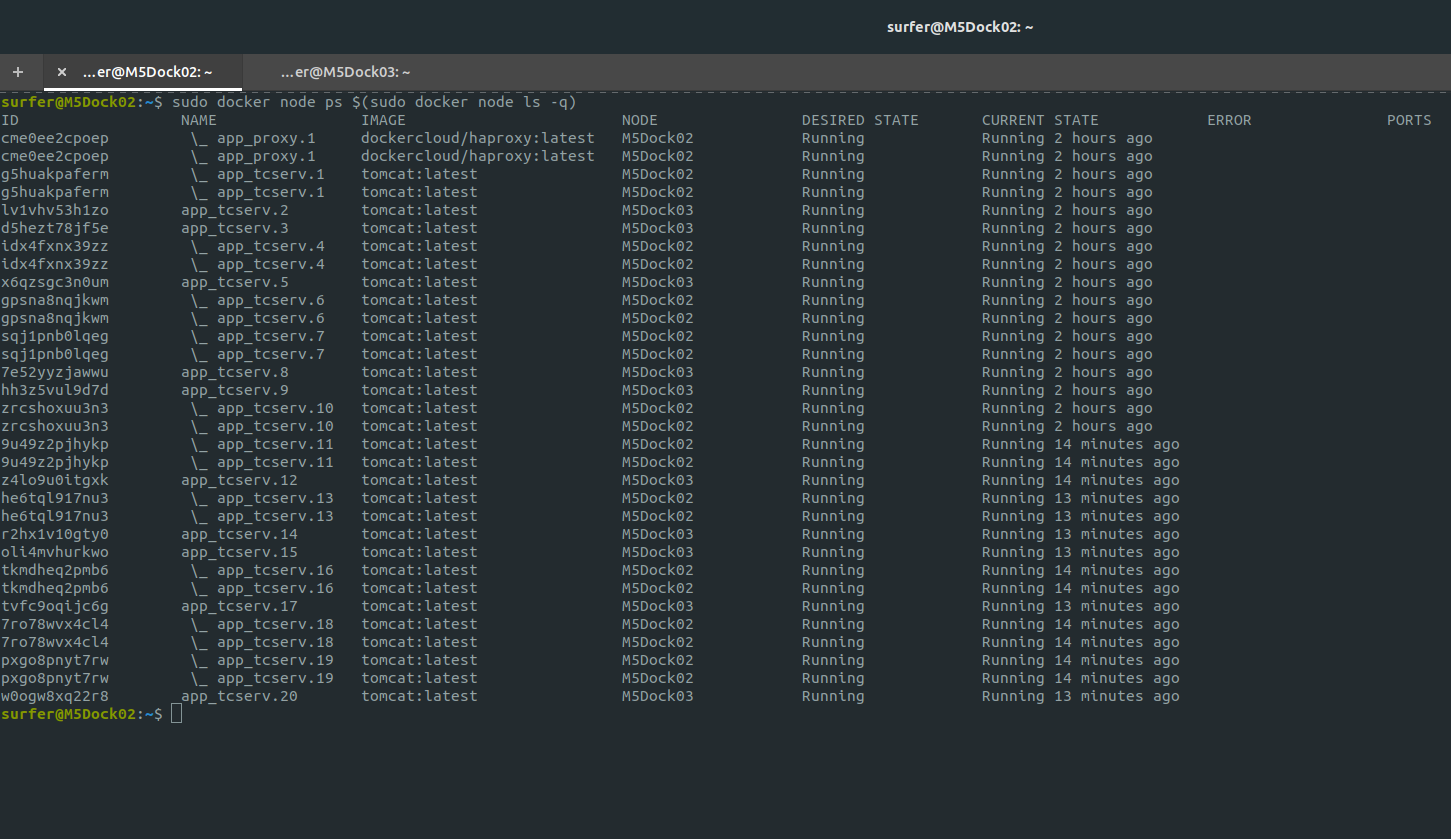

Let’s look at the distribution after the tomcat service is scaled to 20 containers

surfer@M5Dock02:~$ sudo docker node ps zco5lu92ozm10igs78tvviv23
ID             NAME            IMAGE                        NODE       DESIRED STATE   CURRENT STATE           ERROR   PORTS
cme0ee2cpoep   app_proxy.1     dockercloud/haproxy:latest   M5Dock01   Running         Running 2 hours ago
g5huakpaferm   app_tcserv.1    tomcat:latest                M5Dock01   Running         Running 2 hours ago
idx4fxnx39zz   app_tcserv.4    tomcat:latest                M5Dock01   Running         Running 2 hours ago
gpsna8nqjkwm   app_tcserv.6    tomcat:latest                M5Dock01   Running         Running 2 hours ago
sqj1pnb0lqeg   app_tcserv.7    tomcat:latest                M5Dock01   Running         Running 2 hours ago
zrcshoxuu3n3   app_tcserv.10   tomcat:latest                M5Dock01   Running         Running 2 hours ago
9u49z2pjhykp   app_tcserv.11   tomcat:latest                M5Dock01   Running         Running 2 minutes ago
he6tql917nu3   app_tcserv.13   tomcat:latest                M5Dock01   Running         Running 2 minutes ago
tkmdheq2pmb6   app_tcserv.16   tomcat:latest                M5Dock01   Running         Running 2 minutes ago
7ro78wvx4cl4   app_tcserv.18   tomcat:latest                M5Dock01   Running         Running 2 minutes ago
pxgo8pnyt7rw   app_tcserv.19   tomcat:latest                M5Dock01   Running         Running 2 minutes ago
surfer@M5Dock02:~$ sudo docker node ps uqqw3e2bbmxh7ch6ewocrzhz3
ID             NAME            IMAGE           NODE       DESIRED STATE   CURRENT STATE           ERROR   PORTS
lv1vhv53h1zo   app_tcserv.2    tomcat:latest   M5Dock02   Running         Running 2 hours ago
d5hezt78jf5e   app_tcserv.3    tomcat:latest   M5Dock02   Running         Running 2 hours ago
x6qzsgc3n0um   app_tcserv.5    tomcat:latest   M5Dock02   Running         Running 2 hours ago
7e52yyzjawwu   app_tcserv.8    tomcat:latest   M5Dock02   Running         Running 2 hours ago
hh3z5vul9d7d   app_tcserv.9    tomcat:latest   M5Dock02   Running         Running 2 hours ago
z4lo9u0itgxk   app_tcserv.12   tomcat:latest   M5Dock02   Running         Running 2 minutes ago
r2hx1v10gty0   app_tcserv.14   tomcat:latest   M5Dock02   Running         Running 2 minutes ago
oli4mvhurkwo   app_tcserv.15   tomcat:latest   M5Dock02   Running         Running 2 minutes ago
tvfc9oqijc6g   app_tcserv.17   tomcat:latest   M5Dock02   Running         Running 2 minutes ago
w0ogw8xq22r8   app_tcserv.20   tomcat:latest   M5Dock02   Running         Running 2 minutes ago
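Instead of reading two long listings, the per-node task count can be summarised in one line per node. This is a sketch only: `tasks_per_node` is a hypothetical helper that expects one node name per line on standard input, which `docker service ps` can produce with a `--format` template.

```shell
# Read one node name per line on stdin and print "<node> <count>" for
# each node, sorted by node name. tasks_per_node is a hypothetical helper.
tasks_per_node() {
    sort | uniq -c | awk '{ print $2, $1 }'
}

# Usage on the manager node:
#   sudo docker service ps app_tcserv --filter desired-state=running \
#       --format '{{.Node}}' | tasks_per_node
```

For the listings above, this would be expected to report 10 tasks for each of the two nodes.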

As you can see, all 20 containers are distributed evenly across the two nodes. Let’s see whether the HAProxy configuration has been updated:

backend default_service
  server app_tcserv.1.g5huakpafermjjij70072mibv 10.0.0.3:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.10.zrcshoxuu3n3nesd0fpoh81gr 10.0.0.6:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.11.9u49z2pjhykp41ivoqyl1a9t9 10.0.0.21:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.12.z4lo9u0itgxkxp8ljjvq8tcr4 10.0.0.17:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.13.he6tql917nu3w4nidcj0334hm 10.0.0.22:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.14.r2hx1v10gty09t8q820h0i8xo 10.0.0.18:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.15.oli4mvhurkwo53019drsme7sw 10.0.0.23:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.16.tkmdheq2pmb6jmx0pdq5jdf6s 10.0.0.19:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.17.tvfc9oqijc6gjepaje5blolt2 10.0.0.24:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.18.7ro78wvx4cl40keks8yamnn3w 10.0.0.25:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.19.pxgo8pnyt7rwzi62ob3vux1a1 10.0.0.20:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.2.lv1vhv53h1zoq04i9hp6dy6ak 10.0.0.7:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.20.w0ogw8xq22r8ts65hi1ko3zhc 10.0.0.26:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.3.d5hezt78jf5e1lq0z0d4fmwel 10.0.0.8:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.4.idx4fxnx39zzj1l2be6cjuwmu 10.0.0.9:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.5.x6qzsgc3n0umdp7yp1son3pd6 10.0.0.10:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.6.gpsna8nqjkwmznpnmyoiugr0u 10.0.0.4:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.7.sqj1pnb0lqeg1xnn7mkgmyhh3 10.0.0.5:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.8.7e52yyzjawwufxhrf4ws8s3g2 10.0.0.11:8080 check inter 2000 rise 2 fall 3
  server app_tcserv.9.hh3z5vul9d7desnnhn9vvi6ap 10.0.0.12:8080 check inter 2000 rise 2 fall 3

As you can see, the HAProxy configuration has been updated properly. So what’s next? There are a couple of things that we can do:

- Add more nodes, and

- Improve service resiliency with multiple manager nodes
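As a rough guide for the second item: swarm managers keep their state with Raft, which needs a majority of managers to stay available, so a swarm with N managers can lose at most (N - 1) / 2 of them, rounded down. The sketch below only does that arithmetic; `manager_fault_tolerance` is a made-up helper name, and the commented commands are the standard Docker CLI calls for adding a worker and promoting a node.

```shell
# Print how many manager failures a swarm with the given number of
# managers can tolerate while keeping a majority: (N - 1) / 2, rounded down.
# manager_fault_tolerance is a hypothetical helper name.
manager_fault_tolerance() {
    n="$1"
    echo $(( (n - 1) / 2 ))
}

# Related commands, run on a manager node:
#   sudo docker swarm join-token worker   # prints the join command for a new worker
#   sudo docker node promote M5Dock02     # promotes an existing worker to manager
```

Note that going from one manager to two does not add any tolerance (both give 0), which is why odd manager counts such as 3 or 5 are the usual choice.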

Questions? Thoughts? Please share yours in the comment section.